
I am a first-year PhD student at Yale University, advised by Prof. Zhong Lin. Efficiency lies at the heart of computer science, and my current research focuses on efficient AI systems, where I explore approaches that bridge algorithmic advances and system-level optimizations. Outside of research, I enjoy running, swimming, and reading books on history and sociology.

Email / Google Scholar / GitHub / Zhihu


(*: Equal contribution)


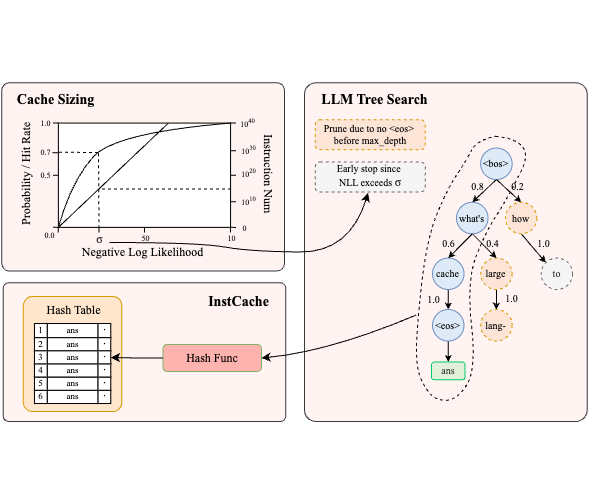

InstCache: A Predictive Cache for LLM Serving

Longwei Zou, Tingfeng Liu, Kai Chen, Jiangang Kong, Yangdong Deng

Preprint, 2025

arXiv


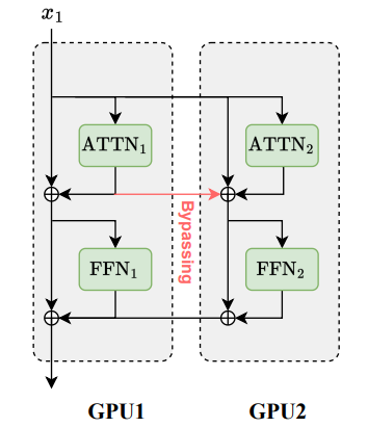

CQIL: Inference Latency Optimization with Concurrent Computation of Quasi-Independent Layers

Longwei Zou, Qingyang Wang, Han Zhao, Jiangang Kong, Yi Yang, Yangdong Deng

ACL, 2024

GitHub / arXiv


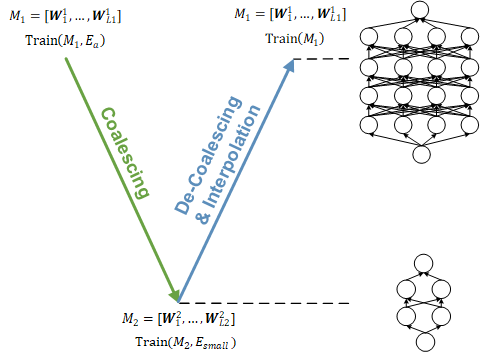

A Multi-Level Framework for Accelerating Training Transformer Models

Longwei Zou, Han Zhang, Yangdong Deng

ICLR, 2024

GitHub / arXiv


Design and source code from Jon Barron's website.